Wouldn't it feel like magic if someone could tell you the emotions of others, be it your customers, students, or employees?

Imagine knowing what's on their minds without having to ask. Instead of misinterpreting someone's sentiment or waiting for them to open up, you could come up with solutions and surprise them.

Sounds too good to be true, right?

With emotion analytics at your disposal, yes, you can do all of this.

But for that, you need a capable emotion analysis API integrated into your preferred application or device.

However, before focusing on how you could benefit from it, let's take a detailed look at every aspect of the emotion analysis API!

What Is an Emotion Analysis API?

An emotion analysis API acts as an interface between two applications, letting one of them identify, extract, and interpret human emotions using machine learning and natural language processing.

By analyzing data inputs such as images, audio, or text, these APIs recognize sentiments and categorize them into seven basic emotional states: joy, anger, fear, sadness, surprise, disgust, and neutral.

Thus, these APIs fill the gap between human interactions and machine responses, allowing applications to react in more human-centered ways.

Today, its uses are far broader than almost anyone could have imagined a couple of years ago, covering areas like marketing, education and e-learning, healthcare, e-commerce, and tourism.

Let me give you a real-world use case that shows why emotion analytics matters, after which you can think about putting it to use through an emotion analysis API.

The entertainment giant Disney uses emotion analysis technology to capture its audience's real-time responses instead of relying on traditional survey-based feedback. In theaters, it captures audiences' facial expressions through infrared cameras and builds its analysis on those data points. As a result, it gains insight into whether the effort and investment behind a movie are truly appreciated.

See how Disney works smarter without going the extra mile of direct conversation? You can do the same.

How do Emotion Analysis APIs Work?

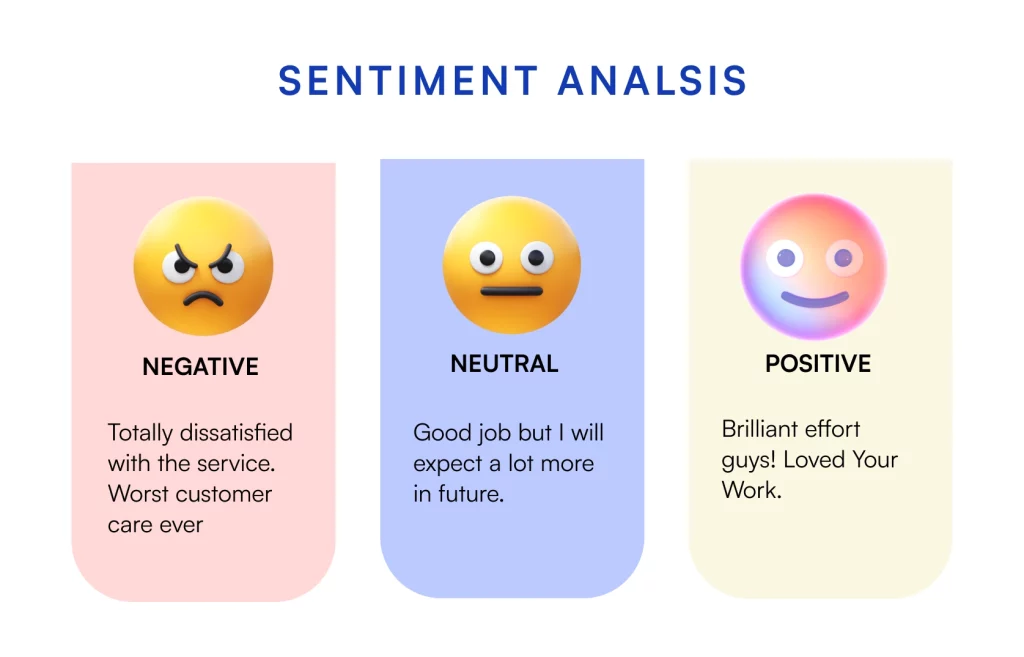

To recognize human emotional states, an AI-powered emotion analysis algorithm classifies sentiments into three categories: positive, negative, and neutral. Generally, the output falls between -1 and +1, where

- -1 indicates negative emotion

- 0 is the sign of neutral, and

- +1 points toward positive sentiment

These categorizations are reported either on a scale or as probabilities. On a scale, an output of 0.7 is taken as positive because it is closer to +1 than to 0. A probability-based output is a multiclass classification that assigns a likelihood to each category: for example, 25% positive, 25% neutral, and 50% negative, which indicates negative sentiment.
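
To make the two output styles concrete, here is a minimal Python sketch. The function names are hypothetical and do not come from any particular API. The scale-based helper assumes the label is whichever anchor (-1, 0, or +1) the score sits closest to, and the probability-based helper assumes the most likely class wins.

```python
def label_from_scale(score: float) -> str:
    """Map a score in [-1, +1] to the nearest anchor: -1, 0, or +1."""
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must be between -1 and +1")
    anchors = {-1: "negative", 0: "neutral", 1: "positive"}
    # Assumption: the label is decided by the closest anchor value.
    nearest = min(anchors, key=lambda anchor: abs(score - anchor))
    return anchors[nearest]


def label_from_probabilities(probabilities: dict[str, float]) -> str:
    """Return the class with the highest probability."""
    return max(probabilities, key=probabilities.get)


print(label_from_scale(0.7))  # positive
print(label_from_probabilities(
    {"positive": 0.25, "neutral": 0.25, "negative": 0.50}
))  # negative
```

A real service may use different cutoffs, so treat the nearest-anchor rule as an illustration and check the thresholds your chosen API documents.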

To understand these sentiment variations more easily, think of your customers' feedback and their emotional expressions as you look at the picture above.

To make the AI work, you first need to integrate an emotion detection API, which goes through several steps to reach an accurate output. Here are those steps:

Data collection

First, the API collects data from various sources, such as facial images, voice recordings, or text inputs. For visual data, it uses facial recognition to detect expressions, while for audio, it focuses on tonal analysis. For text inputs, it identifies emotional cues based on language patterns.

Feature extraction

Next, the API extracts specific features that correlate with emotions. For instance, in facial recognition, the API might focus on facial muscle movement, eye movements, or smiles. For audio, pitch, volume, and speed can reveal emotions like anger or calmness. In text, emotional tone is inferred from word choice and sentence structure.

Emotion classification

Then the API interprets the extracted features and assigns emotions to the input data. Advanced APIs can detect even subtle emotions, such as confusion or excitement, through micro-expressions or vocal nuances.

Accurate response

Finally, the API provides an output in the form of emotion labels, sentiment scores, or mood indicators. This information can then be integrated into applications for further action, such as real-time customer service adjustments, personalized marketing, or user feedback for self-help apps.
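
The four steps above can be sketched for text input as a toy Python pipeline. Everything here is an assumption made for illustration: the tiny word list, the function names, and the `cue_share` field are hypothetical, and a real API would rely on trained models, not a lookup table.

```python
from collections import Counter

# Hypothetical toy lexicon mapping cue words to emotions.
EMOTION_LEXICON = {
    "thanks": "joy", "great": "joy", "love": "joy",
    "angry": "anger", "terrible": "anger",
    "worried": "fear", "afraid": "fear",
    "sad": "sadness", "disappointed": "sadness",
}


def collect(raw_text: str) -> str:
    """Step 1, data collection: normalize the incoming text."""
    return raw_text.strip().lower()


def extract_features(text: str) -> Counter:
    """Step 2, feature extraction: count cue words per emotion."""
    words = (word.strip(".,!?\"'") for word in text.split())
    return Counter(EMOTION_LEXICON[w] for w in words if w in EMOTION_LEXICON)


def classify(features: Counter) -> tuple[str, float]:
    """Step 3, classification: pick the emotion with the most cue words."""
    if not features:
        return "neutral", 0.0  # no cue words matched
    label, hits = features.most_common(1)[0]
    return label, hits / sum(features.values())


def analyze(raw_text: str) -> dict:
    """Step 4, response: return an emotion label and the winning cue share."""
    label, share = classify(extract_features(collect(raw_text)))
    return {"emotion": label, "cue_share": round(share, 2)}


print(analyze("I love this, thanks!"))
```

Keeping each stage as its own function mirrors how the steps are described, and makes it easy to picture swapping the lexicon lookup for a model-based classifier.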

Tips to Choose the Best Emotion Analysis API

A choice based on assumptions can cost you heavily, while a mindful decision can save you from avoidable losses. That's why you should weigh a few essential factors when looking for an emotion detection API. Here they are:

Set your objectives first

When choosing an Emotion Analysis API, it’s essential to match the tool with your specific objectives. Start by defining what you want to achieve.

For example, if your goal is to enhance customer support with real-time sentiment tracking, look for an API that excels in analyzing live data. Clarifying your objectives will streamline your search and ensure the API meets your needs.

Measure accuracy vs performance

Next, weigh accuracy against performance. Some APIs are highly accurate but may require substantial computing power or take longer to process emotions.

For example, a customer expresses gratitude for your assistance during a support interaction, saying, "Thanks a bunch for your help." However, their tone of voice and body language convey a sense of frustration and dissatisfaction.

If your integrated emotion analysis API lacks this contextual understanding, it won't distinguish genuine gratitude from masked frustration. On the other hand, a high-accuracy API may be overkill for applications where near-perfect emotion detection isn't essential, so choose based on your unique needs.
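
One way to picture the "Thanks a bunch" scenario is to compare scores from two channels. The sketch below assumes you already have a text score and a voice score, each between -1 and +1, from whatever API you use; the function name and the `min_strength` cutoff are hypothetical.

```python
def channels_disagree(text_score: float, voice_score: float,
                      min_strength: float = 0.3) -> bool:
    """Flag cases where words and tone point in opposite directions.

    Both scores are assumed to lie in [-1, +1]. A channel only counts
    when its score is at least `min_strength` away from neutral.
    """
    both_strong = (abs(text_score) >= min_strength
                   and abs(voice_score) >= min_strength)
    opposite_signs = (text_score > 0) != (voice_score > 0)
    return both_strong and opposite_signs


# Polite words (positive text) delivered in a frustrated tone (negative voice).
print(channels_disagree(text_score=0.8, voice_score=-0.6))  # True
```

A flagged interaction could then be routed to a human agent, which is cheaper than demanding near-perfect accuracy from every single channel.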

Check the real-time capability of data analysis

Real-time analysis capability is another key factor. Not all APIs can process emotions on live data streams; some perform better in post-analysis scenarios. If real-time feedback and reporting are crucial, especially in user interaction contexts, verify that the API can handle continuous input without delays or lags.
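
A rough way to verify real-time capability during a trial is to time the API call over a batch of sample frames. In this sketch, `analyze` stands in for whatever client call your chosen API exposes, and the 200 ms budget is an assumed target, not a figure from any vendor.

```python
import time
from statistics import quantiles
from typing import Callable, Iterable


def latency_report(analyze: Callable[[bytes], object],
                   frames: Iterable[bytes],
                   budget_ms: float = 200.0) -> dict:
    """Time `analyze` on each frame and compare the p95 latency to a budget."""
    timings_ms = []
    for frame in frames:
        start = time.perf_counter()
        analyze(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    if len(timings_ms) < 2:
        raise ValueError("need at least two frames to estimate a percentile")
    # With n=20 the last cut point is the 95th percentile.
    p95 = quantiles(timings_ms, n=20, method="inclusive")[-1]
    return {
        "frames": len(timings_ms),
        "p95_ms": p95,
        "within_budget": p95 <= budget_ms,
    }


# Stand-in analyzer; replace the lambda with a real API call when testing.
report = latency_report(lambda frame: None, [b"sample"] * 10)
print(report["within_budget"])
```

Looking at the 95th percentile and not the average helps surface the occasional slow response that users actually notice in live interactions.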

Choose the one that aligns with your end goals

Finally, look at factors like scalability, integration options, cost, customer support, and long-term compatibility. The right emotion detection API should be easy to integrate with your existing tech stack and flexible enough to match your business growth. Making a deliberate choice benefits not only your business but also your internal tech team.

Some Emotion Detection APIs that Work on Facial Recognition

There are many APIs that blend psychology with technology. To save you the effort of finding them, we've listed some of the best emotion analysis APIs that work on facial recognition. Let's have a look:

Lystface

Lystface API is a facial recognition-based technology that analyzes specific facial expressions along with subjects' facial coordinates, ages, and positions, compares them, and verifies liveness.

This API could become a developer favorite thanks to its easy installation process, free trial, and plug-and-play compatibility, and a top choice for businesses thanks to its lower costs. It appears to be relatively new, with a growing community and transparent API documentation for its face recognition features.

As for real-world use cases, it caters to all kinds of organizations as well as non-commercial settings where location-based facial authentication is a must for security.

Luxand.cloud

Luxand.cloud API's technology allows your developers to integrate facial recognition and biometric features that span a wide range of functionalities, from detecting faces in both images and video to identifying users based on distinct facial characteristics.

In addition, it offers emotion recognition through facial expression analysis, age and gender estimation, face landmark detection, and even creative features like face animation and morphing. A significant advantage of their platform is the inclusion of ready-to-use code samples, which simplify the integration process, making it more accessible.

Imentiv

Imentiv offers an advanced emotion recognition API powered by built-in AI, enabling seamless sentiment analysis for both image-based and video-based applications. This technology easily identifies dominant emotions, whether from static images or live motion, making it highly adaptable across various use cases.

Its integration is particularly valuable in fields like advertising, marketing, and psychological research, where understanding emotional responses is essential to enhancing user experiences and gathering insightful data.

Emotiva

Emotiva’s API captures real-time emotional responses through emotion detection, head pose tracking, and the extraction of facial action units. Designed for accuracy and reliability, this API offers seamless integration, scalability, and robust data security.

Its versatile applications span market research, media analysis, and user engagement optimization, making it suitable across a wide array of industries that benefit from deeper emotional insights.

SmartClick

SmartClick’s AI-driven emotion detection API leverages facial expression analysis to accurately identify different human emotions. Built on the PyTorch library, this advanced model allows for real-time emotion recognition, making it versatile for a range of applications.

This API is well-suited for diverse scenarios such as ad performance testing, analysis of entertainment content, and enhanced surveillance monitoring, providing valuable insights by detecting audience or user emotions in real-time.

Applications of Emotion Analysis APIs Will Surge in the Future

Emotion analysis is continually evolving. There was a time when we could understand someone's emotions only through in-person interaction, but machine-derived emotional intelligence is now taking over that role. As discussed earlier, emotion analysis APIs cover every input type, from images to video to text.

However, in the years ahead, the focus will shift further toward facial recognition-based sentiment analysis, a market that already reached $6.3 billion in 2023 and is expected to reach $13.4 billion by 2028, because it can smoothly analyze human emotions by processing images, videos, and liveness detection.

So, if you want to stay on trend, get future-ready today by integrating a facial recognition API with Lystface.